Adopting Explainable AI (XAI)

by Dhika Prameswari, Nur Hotimah Yusof

The integration of artificial intelligence (AI) into pharmaceuticals and healthcare has opened a period of rapid innovation with substantial potential for progress. As AI reshapes industries worldwide, AI-driven algorithms have shown strong performance in areas such as manufacturing, drug discovery, and disease forecasting. However, the growing complexity of AI models creates considerable challenges for transparency, interpretability, and regulatory compliance.

This is where Explainable AI (XAI) becomes pertinent. By offering insights into how AI models make decisions, XAI bridges the gap between sophisticated algorithms and the transparency required by regulators. Techniques such as SHAP and LIME are gaining traction as essential tools for ensuring that AI-driven processes maintain accountability and regulatory compliance. In this article, we explore the significance of adopting XAI in the pharmaceutical industry, focusing on how it can help meet regulatory demands, foster trust, and drive innovation.

Importance of Transparency and Interpretability

In regulated industries like pharmaceuticals and healthcare, transparency in AI models is critical for both regulatory compliance and building trust with stakeholders. AI models, often perceived as “black boxes” due to their complexity, must offer clear and understandable decision-making processes to meet regulatory standards and ensure patient safety.

- Regulatory Compliance: Regulatory agencies such as the FDA and EMA, along with harmonization bodies such as the ICH, expect decisions affecting product safety, quality, and efficacy to be fully documented and explainable. This includes AI-driven processes involved in drug development, manufacturing, and quality assurance.

- Building Trust: Transparent AI models foster trust by providing stakeholders with insights into how decisions are made, ensuring that the logic behind critical outcomes is accessible and understandable. This transparency reduces uncertainty and enhances the acceptance of AI in clinical and operational settings.

- Accountability and Traceability: Explainable AI (XAI) techniques, such as SHAP and LIME, help ensure that AI models can be held accountable for their outputs. These methods allow stakeholders to trace the decision-making process, providing a clear explanation of why certain predictions or recommendations were made.

Techniques for Explainability in AI

Explainable AI (XAI) aims to make artificial intelligence models transparent, so that they not only generate accurate predictions but also offer insight into how those predictions were reached. It employs methods such as rule-based systems, feature importance assessment, logic-based systems, quantitative metrics, visualizations, and model-agnostic interpretability strategies. These methods help stakeholders understand AI recommendations and support better decision-making. SHAP and LIME are two widely used model-agnostic techniques that explain predictions across diverse model architectures. They enhance transparency by showing which inputs drove an AI-driven decision, making it more intelligible to users.

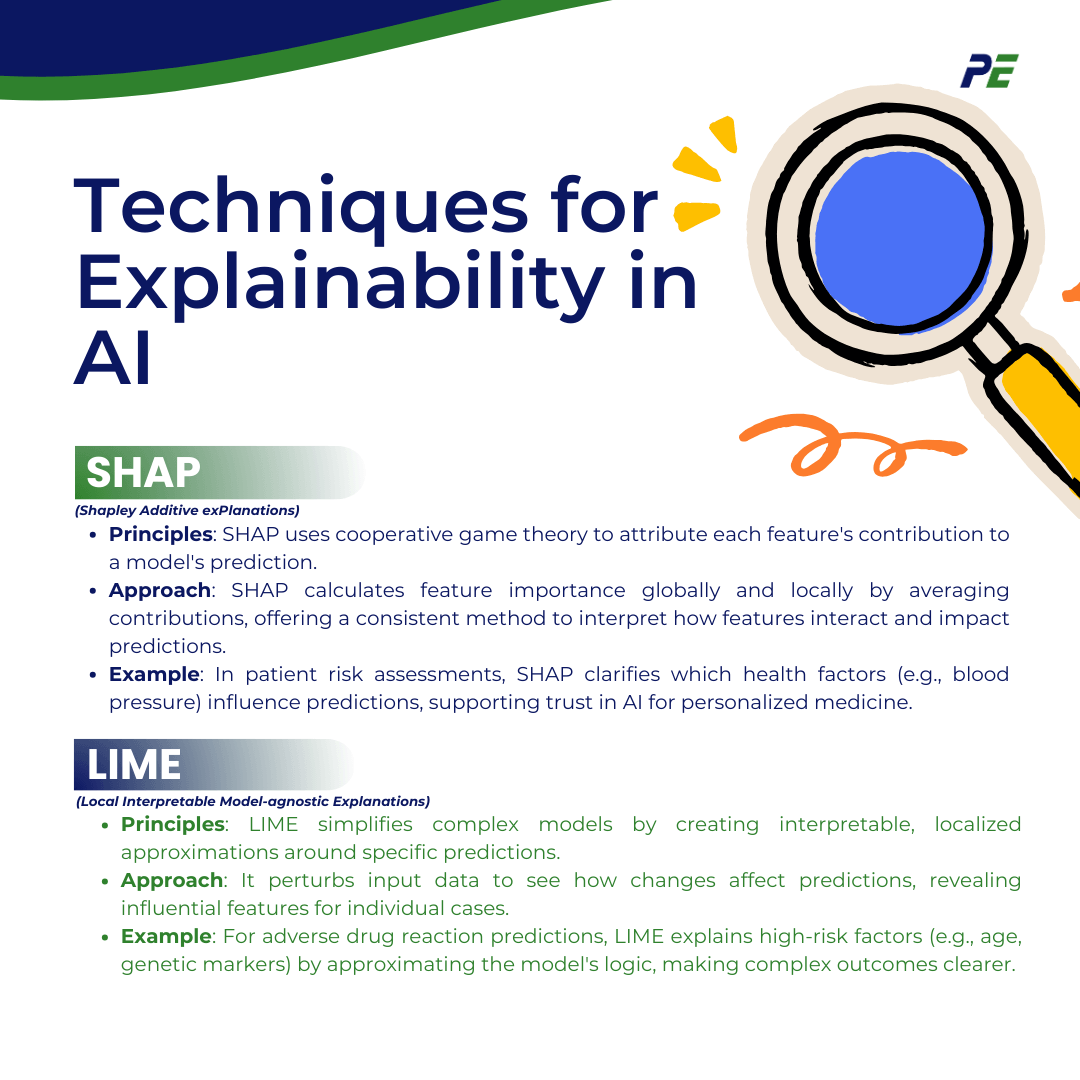

- SHAP (SHapley Additive exPlanations)

- Principles: SHAP is based on cooperative game theory. It treats features as “players” in a game and quantifies each player’s contribution to the final prediction, which makes it a powerful method for explaining individual model predictions. The importance of each feature is calculated by considering all possible combinations of features.

- Approach: The beauty of SHAP lies in its ability to provide both local and global explanations. For a single prediction, it averages each feature’s marginal contribution across all possible feature combinations; aggregating these values over many predictions gives a global view of feature importance. It offers a consistent and theoretically grounded method for feature importance, allowing for a comprehensive understanding of how features interact and contribute to predictions. This helps users understand which features influenced a particular decision and to what extent.

- Example: SHAP can show how different features contribute to a patient’s risk assessment for a certain medical condition. In clinical trial data analysis, a complex AI model may predict patient outcomes based on various health indicators. Using SHAP, researchers can interpret which factors (e.g., blood pressure or cholesterol levels) contributed most to a specific patient’s predicted outcome, enhancing trust in AI-driven insights for personalized medicine.
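
To make the game-theoretic idea concrete, the sketch below computes exact Shapley values for a single patient by enumerating every feature coalition. It is a minimal illustration under stated assumptions, not the algorithm used by any particular SHAP library: the `risk_model` function, its coefficients, the feature names, and the single reference ("baseline") patient are all hypothetical, and "absent" features are simply replaced by their baseline values.

```python
from itertools import combinations
from math import factorial


def risk_model(features):
    """Hypothetical risk score (an assumption for illustration only).

    It contains an interaction term, so it is not purely additive.
    """
    bp = features["systolic_bp"]
    chol = features["cholesterol"]
    age = features["age"]
    score = 0.02 * (bp - 120) + 0.01 * (chol - 200) + 0.03 * (age - 50)
    if bp > 140 and chol > 240:
        score += 0.5  # extra risk when both values are elevated
    return score


def shapley_values(model, instance, baseline):
    """Exact Shapley values of `instance` relative to `baseline`.

    Enumerates all coalitions, so the cost grows as 2^n in the number
    of features; this is only practical for a handful of features.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Features in the coalition take the patient's values;
        # all other features are set to the baseline values.
        mixed = {k: instance[k] if k in coalition else baseline[k] for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {name}) - value(s))
        phi[name] = total
    return phi


patient = {"systolic_bp": 150, "cholesterol": 260, "age": 60}
baseline = {"systolic_bp": 120, "cholesterol": 200, "age": 50}
phi = shapley_values(risk_model, patient, baseline)
for feature, contribution in phi.items():
    print(f"{feature}: {contribution:+.3f}")
```

The attributions add up to the difference between the patient’s score and the baseline score, and the interaction term is split evenly between blood pressure and cholesterol. Production tools approximate this computation, since exact enumeration becomes infeasible beyond a small number of features.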

- LIME (Local Interpretable Model-agnostic Explanations)

- Principles: LIME is designed to make complex AI models, like neural networks or ensemble methods, more understandable by approximating their decision-making process with simpler, interpretable models in the local region around a specific prediction. Instead of trying to explain the entire model, LIME focuses on individual predictions, generating interpretable models (like linear regressions) that approximate the decision boundary for a particular instance. It assumes that the model behaves linearly in this local region.

- Approach: LIME perturbs the input data around the instance of interest and observes the changes in predictions. By fitting a simple model to these perturbed instances, it identifies which features are most influential for that specific prediction, providing insights that are tailored to individual cases.

- Example: Machine learning models predict the likelihood of adverse drug reactions (ADRs) using complex algorithms that can be difficult to interpret. LIME helps by explaining individual predictions through local approximations of the model. It perturbs the patient’s data and fits a simpler model to approximate the original prediction. This method reveals which features, such as age, previous drug reactions, or genetic markers, contributed most to the high-risk prediction.
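
The perturb-and-fit procedure described above can be sketched in a few lines of standard-library Python. This is a simplified illustration of the idea rather than the implementation in the LIME package: the `black_box` model, its coefficients, the feature scales, and the Gaussian perturbation and kernel settings are all assumptions chosen for the example.

```python
import math
import random


def black_box(x):
    """Hypothetical ADR risk model over [age, prior_reactions, marker_level]."""
    age, prior, marker = x
    z = 0.03 * (age - 60) + 0.6 * prior + 0.8 * marker - 1.5
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve a small linear system by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def lime_explain(model, instance, scales, n_samples=2000, kernel_width=1.0, seed=0):
    """Fit a locally weighted linear surrogate around `instance`.

    Returns (intercept, coefficients); each coefficient is the estimated
    change in the model output per one `scale` unit of that feature.
    """
    rng = random.Random(seed)
    d = len(instance)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]  # standardized offsets
        sample = [instance[j] + z[j] * scales[j] for j in range(d)]
        dist2 = sum(v * v for v in z)
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # nearer = heavier
        rows.append([1.0] + z)
        targets.append(model(sample))
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y
    p = d + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)]
            for i in range(p)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(p)]
    beta = solve(xtwx, xtwy)
    return beta[0], beta[1:]


patient = [72.0, 2.0, 1.2]   # age, prior reactions, marker level (hypothetical)
scales = [5.0, 0.5, 0.3]     # assumed perturbation size per feature
intercept, coefs = lime_explain(black_box, patient, scales)
for name, c in zip(["age", "prior_reactions", "marker_level"], coefs):
    print(f"{name}: {c:+.4f}")
```

The surrogate’s coefficients indicate which features push this particular patient’s predicted risk up or down near their current values. They describe the model only in that neighborhood, so a different patient may yield a different ranking.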

Regulatory Implications of Explainable AI

The regulatory implications of Explainable AI (XAI) in the pharmaceutical industry are significant, particularly in enhancing transparency, accountability, and trust in AI-driven medical applications. As AI technologies evolve, regulatory bodies such as the U.S. Food and Drug Administration (FDA) and the European Medicines Agency (EMA) must adapt their frameworks to address the unique challenges posed by these systems.

- AI-Specific Regulatory Frameworks: Dedicated regulatory frameworks should be established specifically for AI technologies in pharmaceuticals and medical devices. These frameworks would address unique challenges posed by AI, such as algorithm transparency and data integrity.

- Dynamic Approval Processes: The guidelines might include dynamic approval processes that allow for continuous monitoring and real-time data analysis of AI-enabled products post-market. This would ensure ongoing safety and efficacy as AI systems learn and adapt over time.

- Standardization of In Silico Trials: There could be standardized protocols for conducting in silico trials, ensuring consistency and reliability in how AI models are validated and used in drug development and device testing.

- Ethical Considerations: New guidelines may also encompass ethical considerations related to AI, such as bias in algorithms and patient privacy, ensuring that AI applications in healthcare are equitable and respect patient rights.

- Collaboration with AI Developers: Regulatory bodies might promote collaboration with AI developers to create best practices and guidelines that facilitate innovation while maintaining regulatory compliance, fostering a cooperative environment between regulators and the tech industry.

- XAI’s Role in Ethical and Legal Accountability: Explainable AI (XAI) plays a vital role in meeting ethical and legal obligations for accountability in AI-driven processes. Regulations often demand that organizations not only explain how AI systems work but also demonstrate accountability in how these systems are designed, trained, and deployed.

- Ethical Accountability: XAI helps organizations detect and address biases that could lead to unfair or discriminatory outcomes, supporting fairness and ethical decision-making. By making AI decisions transparent, organizations can demonstrate that their models are not perpetuating hidden biases and are aligned with ethical standards, so that AI systems operate in a socially responsible manner.

- Legal Accountability: Many regulations require that businesses maintain control and responsibility over their AI systems. XAI supports this by providing a clear audit trail of how decisions were made, which is essential in cases of disputes, audits, or regulatory inspections. This traceability ensures that AI decisions can be explained and justified, enabling organizations to comply with legal obligations regarding transparency, fairness, and accountability.
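
One way to picture such an audit trail is as a stored record per prediction that pairs the model’s output with its explanation. The sketch below is only an illustration under assumptions: the `ExplanationRecord` class, its field names, and the example values are hypothetical and do not reflect any regulatory requirement or specific product. A SHA-256 digest over the serialized record is included so that later changes to a stored record can be detected.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ExplanationRecord:
    """Hypothetical audit entry linking one prediction to its explanation."""
    model_version: str
    timestamp_utc: str
    inputs: dict
    prediction: float
    attributions: dict  # e.g. per-feature contributions from SHAP or LIME

    def to_json(self) -> str:
        # Sorted keys give a stable serialization for hashing
        return json.dumps(asdict(self), sort_keys=True)

    def digest(self) -> str:
        # Fingerprint of the record; any edit to the content changes it
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


record = ExplanationRecord(
    model_version="adr-risk-1.0",              # illustrative identifier
    timestamp_utc="2024-01-01T00:00:00Z",      # illustrative timestamp
    inputs={"age": 72, "prior_reactions": 2},
    prediction=0.74,
    attributions={"age": 0.03, "prior_reactions": 0.06},
)
print(record.to_json())
print(record.digest())
```

Storing the digest alongside each record, or in a separate log, lets a reviewer confirm during an audit that the explanation on file is the one produced at prediction time.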

Bridging the Gap Between AI Complexity and Compliance

Sophisticated AI models, such as deep neural networks, excel at solving complex problems, but they often operate as “black boxes,” meaning their internal workings are difficult to interpret. This creates a major challenge in regulated industries like pharmaceuticals and healthcare, where transparency is essential for ensuring fairness, accountability, and compliance. The main challenges include:

- Complexity vs. Interpretability: Advanced AI models are highly complex with millions of parameters, making it hard to explain how specific decisions are made. This complexity can lead to opacity, which is problematic when regulators demand clear explanations for critical decisions, such as patient diagnoses or drug efficacy evaluations.

- Trust and Accountability: Without transparency, it is difficult to trust AI outputs, especially when decisions impact health, safety, or personal data. Stakeholders, including regulators, developers, and end-users, need to understand the reasoning behind AI-driven decisions to ensure that these are fair, reliable, and unbiased.

- Regulatory Scrutiny: In industries where adherence to strict regulatory compliance is mandatory, opaque AI models risk rejection or delayed approvals, as regulatory bodies may be reluctant to approve systems that they cannot fully comprehend or scrutinize.

Explainable AI (XAI) techniques assist in addressing these obstacles by rendering AI outputs more interpretable, thus fostering trust and enabling more efficient regulatory approvals.

- Bridging the Gap Between Complexity and Clarity: XAI tools like SHAP and LIME provide insights into how AI models reach their conclusions by attributing importance to specific features or input data. This allows developers to balance sophisticated algorithms with the need for clear, understandable explanations of model behavior, making even complex AI systems more transparent.

- Regulatory Acceptance: XAI enables regulators to understand how AI systems function, increasing their confidence in approving such systems. By providing interpretable outputs, developers can explain how the model’s decisions align with regulatory standards, hence easing the approval process. For instance, in the pharmaceutical industry, AI models used in drug discovery or clinical trials must meet stringent safety and efficacy requirements. With XAI, regulators can trace decisions back to specific data points, ensuring that the model operates according to the established guidelines.

- Building Trust with Stakeholders: XAI enhances trust by making AI decisions explainable to a broader audience, including regulators, healthcare professionals, and patients. When stakeholders can clearly see how and why an AI system made a specific decision, they are more likely to trust the system and its outcomes. This transparency is critical for gaining acceptance and ensuring that AI-driven technologies are used responsibly in regulated industries.

Conclusion

In conclusion, the integration of Explainable AI (XAI) is essential for bridging the gap between complex AI models and the need to understand how they reach their decisions. Techniques such as SHAP and LIME help explain AI decisions, increasing trust and accountability. With regulatory bodies like the FDA and EMA prioritizing transparency, reliability, and interpretability, XAI enhances understanding, compliance, and acceptance, ultimately facilitating more efficient regulatory approvals.

Partnering with PharmEng Technology

As AI continues to reshape the pharmaceutical and healthcare sectors, organizations are under increasing pressure to innovate and comply with stringent regulatory requirements. At PharmEng Technology, we understand that the complexity of AI models—particularly in regulated industries like pharmaceuticals—can pose significant challenges. That’s why integrating Explainable AI (XAI) is no longer just an option, but a strategic necessity. With XAI, we bridge the gap between cutting-edge AI technologies and the transparency required for regulatory approval, ensuring that your processes remain accountable, interpretable, and compliant.

Ready to Transform Your AI Operations?

Join the growing number of forward-thinking organizations streamlining their AI initiatives while maintaining compliance. Contact us today at info.asia@pharmeng.com or fill out our quick form here to schedule a free assessment. Let PharmEng Technology guide you through the complexities of AI and regulatory demands, so you can confidently innovate, achieve faster approvals, and drive your business forward with agility and trust.

About PharmEng Technology

PharmEng Technology is a global consulting firm specializing in pharmaceutical engineering, regulatory affairs, and compliance. With a commitment to quality and innovation, PharmEng Technology provides comprehensive solutions to meet the evolving needs of the pharmaceutical and biotechnology industries.

Contact Information

PharmEng Technology

Email: info.asia@pharmeng.com